Image courtesy of Julien Tromeur, via Unsplash

If you use large language models (LLMs) like ChatGPT, Gemini, or Meta AI, you’ve likely wondered how to get more meaningful responses. Today’s AI tools rely heavily on prompts, which are instructions given to LLMs in text form. This process of crafting and inputting instructions is called prompt engineering. Essentially, prompt engineering is about asking your LLM the right questions to get accurate and meaningful responses.

What Makes Prompt Engineering Powerful?

Large Language Models (LLMs) are AI programs trained on vast amounts of text from books, websites, and other sources. This training enables them to recognize patterns in human language and predict which words are most likely to come next in a given context. As a result, LLMs can perform various tasks, such as answering questions, summarizing documents, creating marketing content, and even translating between languages.

Despite their capabilities, LLMs have limitations. One issue is bias: a model can pick up and repeat stereotypes present in its training data. Another problem is hallucination, where the AI generates information that sounds plausible but is factually incorrect. Additionally, while LLMs can analyze words extensively, they have no real-world experience of their own, so they often miss the emotional or practical nuance a person would grasp intuitively.
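To make the idea of "recognizing patterns and predicting words" more concrete, here is a toy sketch in Python. It is an assumption-laden illustration, not how a real LLM works: it only counts which word follows which in a tiny made-up corpus, whereas real models use neural networks trained on far more text. The function names `train_bigrams` and `predict_next` are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, Optional


def train_bigrams(text: str) -> Dict[str, Counter]:
    """Count, for every word, which words follow it in the text."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    words = text.lower().split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def predict_next(counts: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# A tiny made-up corpus, used only for illustration.
corpus = "the cat sat on the mat . the cat ate the fish ."
model = train_bigrams(corpus)

print(predict_next(model, "the"))  # prints: cat
print(predict_next(model, "dog"))  # prints: None
```

The toy model also hints at the limitations mentioned above: it can only repeat patterns found in its data, and it has nothing to say about words it has never seen.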

How to Ask AI the Right Questions

To get accurate answers from AI, you need to ask the right questions in the right way. First, be clear about what you want before you ask. Second, provide background information so the AI understands the context of your question. Third, be specific: details such as dates, locations, or technical terms lead to more accurate answers.
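One way to put these three habits into practice is to assemble prompts from named parts. The sketch below is a minimal illustration in Python; the helper `build_prompt`, its parameters, and the "Background/Task" labels are assumptions made for this example, not a standard format required by any LLM.

```python
from typing import Dict, Optional


def build_prompt(goal: str,
                 context: str = "",
                 details: Optional[Dict[str, str]] = None) -> str:
    """Combine a goal, optional background, and specific details into one prompt."""
    parts = []
    if context:
        parts.append(f"Background: {context}")
    parts.append(f"Task: {goal}")
    # Each detail (date, location, technical term, ...) gets its own labeled line.
    for name, value in (details or {}).items():
        parts.append(f"{name}: {value}")
    return "\n".join(parts)


# A vague prompt: no context, no specifics.
vague = build_prompt("Tell me about the weather.")

# A specific prompt: clear goal, background, and concrete details.
specific = build_prompt(
    goal="Summarize the typical weather so I can pack appropriately.",
    context="I am planning a one-week hiking trip.",
    details={"Location": "Banff, Canada", "Dates": "first week of October"},
)

print(vague)
print("---")
print(specific)
```

The second prompt gives the model the goal, the situation, and the specifics it needs, so it is less likely to answer with generalities.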

Refining Your Prompts for Better Results

Getting the desired response from an LLM may take several attempts. The best approach is to refine your question iteratively: start with a basic question, review the AI’s response, adjust the question, and repeat until you get a satisfactory answer.
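The ask, review, adjust cycle can be sketched as a small loop. This is only an illustration under stated assumptions: `refine` is a hypothetical helper, and `fake_model` is a stand-in for a real LLM call so that the example runs without any external service. In practice, you would usually review the answer yourself rather than with an automatic check.

```python
from typing import Callable, List, Tuple


def refine(ask_model: Callable[[str], str],
           question: str,
           is_satisfying: Callable[[str], bool],
           adjustments: List[str]) -> Tuple[str, str]:
    """Ask, review, and adjust until the answer passes the check
    or the list of adjustments runs out."""
    prompt = question
    answer = ask_model(prompt)
    for extra in adjustments:
        if is_satisfying(answer):
            break
        # Adjust the question by appending one more instruction, then ask again.
        prompt = f"{prompt}\n{extra}"
        answer = ask_model(prompt)
    return prompt, answer


def fake_model(prompt: str) -> str:
    """Stand-in for a real LLM: it only gives a focused answer
    when the prompt asks for a bullet list."""
    if "bullet" in prompt:
        return "- Point one\n- Point two"
    return "A long, unfocused paragraph."


final_prompt, final_answer = refine(
    ask_model=fake_model,
    question="Explain prompt engineering.",
    is_satisfying=lambda answer: answer.startswith("- "),
    adjustments=["Keep it under 50 words.", "Answer as a bullet list."],
)

print(final_prompt)
print(final_answer)
```

Here the first two attempts fail the check, and the third succeeds once the prompt asks for a bullet list.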

Using Examples to Guide AI (Few-Shot Prompting)

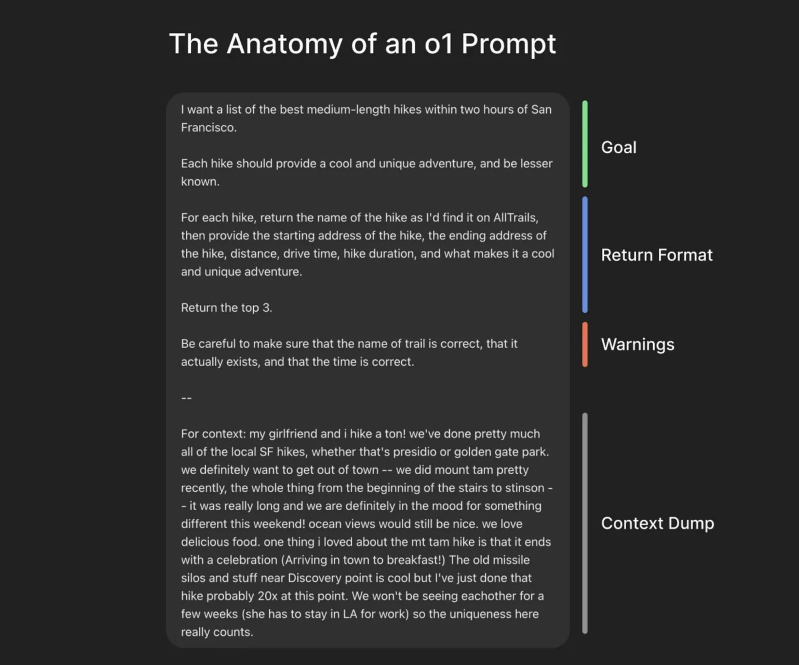

Another effective way to improve AI responses is few-shot prompting: provide examples or a template for the task so the AI can follow the same pattern. For instance, if you need the AI to write an email in a certain style, give it an example email, and it will try to replicate that style in its response. The image in this section shows a prompt structure for ChatGPT recommended by Dan Mac and retweeted by OpenAI’s President Greg Brockman.
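Separately from the structure credited to Dan Mac, the few-shot idea itself can be sketched in a few lines of Python. The helper `few_shot_prompt` and the "Input/Output" labels below are assumptions chosen for this illustration; any consistent pattern that the examples and the new request share would serve the same purpose.

```python
from typing import List, Tuple


def few_shot_prompt(instruction: str,
                    examples: List[Tuple[str, str]],
                    new_input: str) -> str:
    """Build a prompt that shows worked examples before the real request."""
    lines = [instruction, ""]
    for source, target in examples:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
        lines.append("")
    # The final entry follows the same pattern but leaves the output blank,
    # inviting the model to complete it in the same style.
    lines.append(f"Input: {new_input}")
    lines.append("Output:")
    return "\n".join(lines)


prompt = few_shot_prompt(
    instruction="Rewrite each note as a polite one-sentence email.",
    examples=[
        ("meeting moved to 3pm",
         "I wanted to let you know that our meeting has been moved to 3 pm."),
        ("need report by friday",
         "Could you please send me the report by Friday?"),
    ],
    new_input="lunch cancelled tomorrow",
)

print(prompt)
```

Because the prompt ends with an unfinished "Output:" line, the model is nudged to continue the pattern set by the two examples.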

Conclusion

Learning to use AI effectively is like learning a new language. Prompt engineering helps us get the most out of AI by asking the right questions, refining our requests, and guiding the AI to produce accurate responses. As AI becomes increasingly important in everyday life, knowing how to communicate with it properly will help us work faster and more efficiently. At the end of the day, an LLM is only as smart as the prompts it is given.